Image processing is central to the image quality we now take for granted from smartphone cameras. Since the early days of basic image capture, advances in sensor technology, computational photography, and image processing algorithms have transformed mobile photography. This article explores the interplay between hardware and software that produces the images modern smartphones deliver.

We will examine the evolution of image sensor technology, comparing CMOS and CCD sensors and their impact on image quality. The crucial role of computational photography techniques like HDR, noise reduction, and super-resolution will be detailed, along with the increasingly important contributions of artificial intelligence. We’ll also investigate the image signal processor (ISP), image enhancement algorithms, and various image stabilization methods, highlighting their individual and collective contributions to the final image.

Image Sensor Technology in Smartphones

The evolution of smartphone cameras has been remarkable, driven largely by advancements in image sensor technology. From the low-resolution sensors and grainy images of early camera phones, we’ve progressed to sensors capable of producing high-resolution photos and videos that, in good conditions, can approach the output of dedicated cameras. This progress reflects both the miniaturization and performance improvements in sensor technology and the advancements in computational photography.

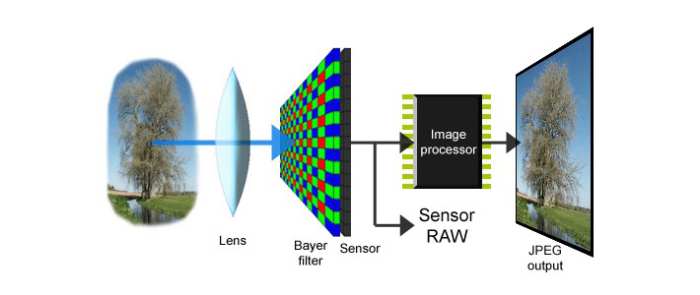

The quality of a smartphone photograph hinges significantly on the underlying image sensor. This component captures light and converts it into digital data, forming the raw material for image processing. Understanding the technology behind these sensors is crucial to appreciating the leaps and bounds made in smartphone photography.

Evolution of Smartphone Image Sensor Technology

Early smartphone cameras utilized relatively small and low-resolution sensors, resulting in images with limited detail and poor low-light performance. However, continuous innovation has led to significant improvements in sensor size, pixel density, and light sensitivity. The introduction of backside-illuminated (BSI) sensors was a pivotal moment, allowing for increased light capture and improved low-light performance. Subsequently, advancements in pixel technology, such as the development of larger pixels and more sophisticated photodiode designs, further enhanced image quality. More recently, the integration of multi-camera systems, featuring sensors with varying focal lengths and capabilities, has broadened the creative possibilities of smartphone photography. For instance, the transition from 8MP sensors to 108MP and beyond signifies a dramatic increase in detail capture.

Comparison of CMOS and CCD Image Sensors

Two types of image sensors have historically competed in digital imaging: CMOS (Complementary Metal-Oxide-Semiconductor) and CCD (Charge-Coupled Device). While CCD sensors were prevalent in early digital cameras, CMOS sensors are now used in virtually all smartphones due to their superior power efficiency, lower manufacturing cost, and ability to integrate processing circuitry on the same chip. CCD sensors, long known for their high image quality, consume significantly more power and are more expensive to manufacture, making them poorly suited to the power-constrained environment of smartphones. The on-chip readout and processing circuitry of CMOS sensors also allows for faster image capture, which is crucial for features like burst mode and video recording.

Physical Characteristics of Smartphone Image Sensors and Their Impact on Image Quality

The physical characteristics of a smartphone image sensor play a critical role in determining overall image quality. Sensor size, meaning the area available to capture light, significantly impacts light-gathering ability and dynamic range. Larger sensors generally perform better in low-light conditions and, at a given field of view and aperture, produce a shallower depth of field. Pixel size also influences image quality. Larger pixels capture more light, leading to improved low-light performance and reduced noise. However, larger pixels mean fewer pixels for a given sensor size, which limits resolution. The arrangement of the pixels and of the color filters above them (for example, a standard Bayer layout versus layouts that group same-color pixels so they can be binned together) can also affect color reproduction and light sensitivity. For example, with the same sensor generation and optics, a sensor with larger pixels (e.g., 1.8µm) will typically outperform one with smaller pixels (e.g., 1.0µm) in low-light scenarios, producing less noisy images with better detail preservation. The number of pixels (resolution) determines the level of detail captured, though it is not the sole determinant of image quality. High resolution combined with smaller pixels can result in images that appear sharp in good light but are prone to noise in low light.
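To put rough numbers on the pixel-size comparison above, the following sketch estimates how much more light a 1.8µm pixel collects than a 1.0µm pixel. It assumes square pixels, identical optics and exposure, and shot-noise-limited capture; the function name `relative_light_and_snr` is purely illustrative.

```python
import math


def relative_light_and_snr(pitch_a_um: float, pitch_b_um: float) -> tuple[float, float]:
    """Compare two square pixels by side length (pixel pitch, in micrometres).

    Returns (light_ratio, snr_ratio) of pixel A relative to pixel B.
    Assumption: same optics, exposure and quantum efficiency, and noise
    dominated by photon shot noise.
    """
    light_ratio = (pitch_a_um / pitch_b_um) ** 2  # collected light scales with pixel area
    snr_ratio = math.sqrt(light_ratio)            # shot-noise SNR scales with sqrt(photons)
    return light_ratio, snr_ratio


light, snr = relative_light_and_snr(1.8, 1.0)
print(f"light gathered: {light:.2f}x, shot-noise SNR: {snr:.2f}x")
```

Under these assumptions the larger pixel gathers a little over three times the light, but the signal-to-noise advantage grows only with the square root of that figure, which is one reason real-world differences are smaller than the area ratio alone suggests.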

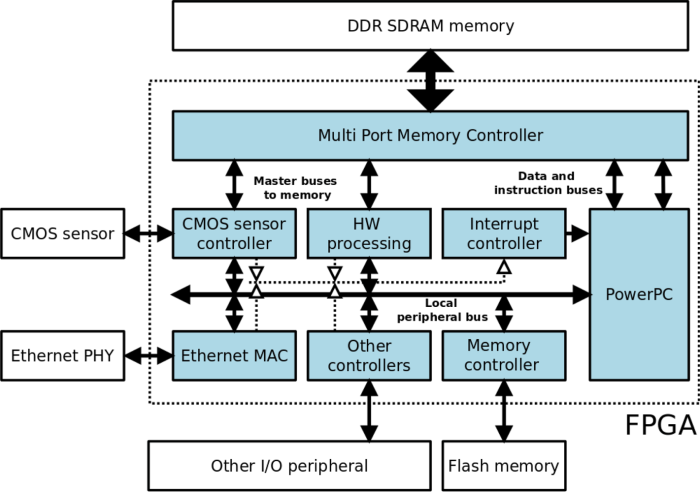

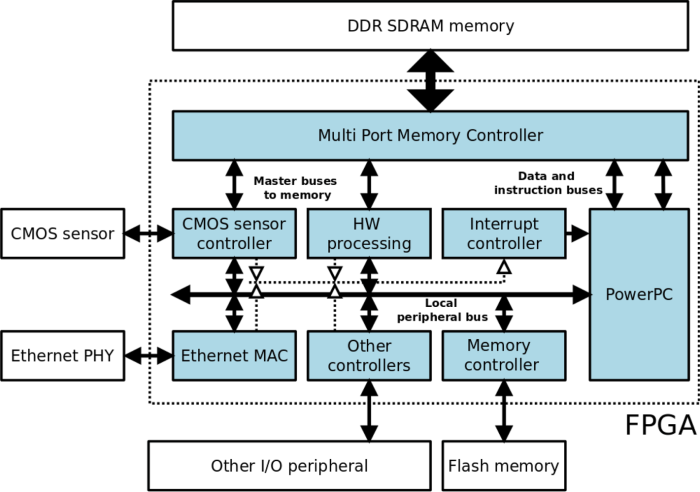

Image Signal Processing (ISP)

The Image Signal Processor (ISP) is the unsung hero of smartphone photography. It takes the raw data from the image sensor – a complex array of electrical signals – and transforms it into the vibrant, detailed images we see on our screens. Without a sophisticated ISP, even the best sensor would produce dull, noisy, and inaccurate pictures. The ISP’s role is crucial in bridging the gap between raw sensor data and a visually appealing photograph.

The ISP performs a series of complex calculations and algorithms to enhance the image quality. This process is multifaceted and involves several key stages, each contributing to the final output. The efficiency and sophistication of these stages directly impact the overall image quality and features available to the user.

Stages of Image Processing within the ISP

The transformation of raw sensor data into a final image involves a multi-stage pipeline. These stages work sequentially, each building upon the results of the previous one. While specific implementations and the exact ordering vary across manufacturers (defect correction and white balance, for example, are often applied to the raw data before demosaicing), the core functionalities remain consistent.

- Demosaicing: The sensor captures raw data in a Bayer pattern, where each pixel only records one color channel (red, green, or blue). Demosaicing interpolates the missing color information to create a full-color image. Algorithms used here can significantly affect the sharpness and color accuracy of the final image.

- Defect Correction: This stage identifies and corrects defects in the sensor data, such as “hot” or “dead” pixels that produce incorrect or no signal respectively. This ensures a clean and consistent image base.

- White Balance: Different light sources have different color temperatures. White balance adjusts the color balance of the image to make white objects appear white, regardless of the lighting conditions. This is crucial for achieving natural-looking colors.

- Color Correction: This stage refines the color accuracy further, correcting for inconsistencies in the sensor’s response to different wavelengths of light. Advanced ISPs may use sophisticated color profiles to achieve more accurate and pleasing colors.

- Noise Reduction: Image sensors inevitably introduce noise, which manifests as graininess or speckles. Noise reduction algorithms attempt to minimize this noise without sacrificing too much detail. The balance between noise reduction and detail preservation is a key challenge.

- Sharpness Enhancement: This stage enhances the sharpness and detail of the image, often using techniques like edge detection and sharpening filters. Over-sharpening can lead to artifacts, so a careful balance is needed.

- Tone Mapping: This step adjusts the dynamic range of the image, mapping the wide range of brightness levels captured by the sensor to the limited range that can be displayed on the screen. This is essential for preserving detail in both highlights and shadows.

- Image Compression: The final stage compresses the image into a format suitable for storage and sharing, such as JPEG or HEIF. Compression algorithms affect the file size and quality of the image.
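As an illustration of the demosaicing stage listed above, here is a minimal sketch of neighbour-averaging (bilinear-style) interpolation. It assumes an RGGB Bayer layout and a raw frame stored as a list of rows of floats; real ISPs use edge-aware methods, and the names `bayer_channel` and `demosaic` are hypothetical.

```python
def bayer_channel(y: int, x: int) -> int:
    """Channel index (0=R, 1=G, 2=B) sampled at (y, x) in an assumed RGGB mosaic."""
    if y % 2 == 0 and x % 2 == 0:
        return 0
    if y % 2 == 1 and x % 2 == 1:
        return 2
    return 1


def demosaic(raw: list[list[float]]) -> list[list[tuple[float, ...]]]:
    """Fill in the two missing channels at each pixel by averaging the
    same-channel samples found in its 3x3 neighbourhood."""
    h, w = len(raw), len(raw[0])
    image = []
    for y in range(h):
        row = []
        for x in range(w):
            sums, counts = [0.0, 0.0, 0.0], [0, 0, 0]
            for ny in range(max(0, y - 1), min(h, y + 2)):
                for nx in range(max(0, x - 1), min(w, x + 2)):
                    c = bayer_channel(ny, nx)
                    sums[c] += raw[ny][nx]
                    counts[c] += 1
            rgb = [s / n for s, n in zip(sums, counts)]
            rgb[bayer_channel(y, x)] = raw[y][x]  # keep the measured sample unchanged
            row.append(tuple(rgb))
        image.append(row)
    return image


# A flat orange patch: every red site reads 0.8, green 0.5, blue 0.2.
patch = [[(0.8, 0.5, 0.2)[bayer_channel(y, x)] for x in range(4)] for y in range(4)]
rgb_image = demosaic(patch)
print(rgb_image[1][2])
```

On a uniform patch the interpolation recovers the same colour everywhere. On real scenes, plain averaging of this kind blurs edges and can produce colour fringes, which is why the choice of demosaicing algorithm affects sharpness and colour accuracy.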

Comparison of ISP Pipelines Across Manufacturers

Different smartphone manufacturers employ varying ISP pipelines, reflecting their unique approaches to image processing. For example, Google’s Pixel phones are known for their computational photography capabilities, relying heavily on sophisticated software algorithms within the ISP to enhance image quality, particularly in low-light conditions. Apple, on the other hand, often emphasizes hardware-software co-design, integrating advanced ISP hardware with optimized software algorithms. Companies like Samsung and Huawei also have their own proprietary ISP technologies, each focusing on different aspects of image quality and feature sets. These differences result in variations in image style, noise handling, dynamic range, and overall image quality, contributing to the diverse photographic experiences offered by different smartphone brands. A direct comparison requires detailed technical specifications which are often proprietary and not publicly available.

Image Enhancement Algorithms

Smartphone cameras rely heavily on sophisticated image enhancement algorithms to produce high-quality photos and videos, compensating for limitations in the sensor and capturing conditions. These algorithms process the raw image data from the sensor, applying various techniques to improve aspects like sharpness, contrast, and color accuracy, ultimately shaping the final image perceived by the user.

Image enhancement algorithms work by manipulating the pixel values of the raw image data. They typically operate in the digital domain, transforming the numerical representation of the image to achieve the desired improvements. The effectiveness of these algorithms depends on factors like the computational power of the smartphone processor and the specific characteristics of the image sensor. Furthermore, the algorithms often need to be optimized for different scenarios, such as low-light conditions or fast-moving subjects.

Sharpening Algorithms

Sharpening algorithms aim to increase the perceived sharpness of an image by enhancing edges and details. A common technique involves using high-pass filtering, which emphasizes high-frequency components in the image, effectively highlighting transitions between different regions. This results in a crisper, more defined image. Another approach is unsharp masking, which subtracts a blurred version of the image from the original to isolate edges and fine detail, then adds a scaled copy of that difference back to the original so that edges appear more prominent. The level of sharpening is a tunable parameter, which camera and editing apps sometimes expose to the user. Over-sharpening can lead to artifacts like halos around edges.
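Unsharp masking can be sketched on a single row of pixels. The example below assumes a simple box blur as the low-pass step (production pipelines more often use a Gaussian), and the helper names are illustrative only.

```python
def box_blur(signal: list[float], radius: int = 1) -> list[float]:
    """Average each sample with its neighbours; indices are clamped at the ends."""
    n = len(signal)
    blurred = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


def unsharp_mask(signal: list[float], amount: float = 1.0, radius: int = 1) -> list[float]:
    """Add `amount` times the detail layer (original minus blurred) back to the original."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]


edge = [10.0, 10.0, 10.0, 50.0, 50.0, 50.0]  # a single dark-to-bright step
sharpened = unsharp_mask(edge, amount=1.0)
print([round(v, 1) for v in sharpened])
```

The samples on either side of the step are pushed below the dark level and above the bright level. That overshoot is what makes the edge look crisper, and with a large `amount` it becomes the visible halo mentioned above.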

Contrast Enhancement Algorithms

Contrast enhancement algorithms adjust the dynamic range of the image, increasing the difference between the darkest and brightest areas. This improves the overall visual impact by making details in both shadows and highlights more visible. Common methods include histogram equalization, which redistributes the pixel intensities to cover the entire available range, and contrast stretching, which expands the range of pixel values to occupy a larger portion of the dynamic range. These techniques can make images appear more vibrant and detailed, but excessive contrast enhancement can lead to clipping (loss of detail in highlights or shadows).
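Both methods named above can be sketched for 8-bit grayscale values. This is a minimal illustration that assumes pixel values are integers in the range 0 to 255; the function names are hypothetical.

```python
def contrast_stretch(pixels: list[int]) -> list[int]:
    """Linearly map the darkest value to 0 and the brightest to 255."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return list(pixels)  # flat image: nothing to stretch
    return [round((p - lo) * 255 / (hi - lo)) for p in pixels]


def equalize(pixels: list[int]) -> list[int]:
    """Histogram equalization: remap each value by the cumulative histogram."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:
        return list(pixels)  # only one distinct value present
    return [round((cdf[p] - cdf_min) * 255 / (total - cdf_min)) for p in pixels]


hazy = [100, 110, 120, 130]  # low-contrast values bunched in the mid-tones
print(contrast_stretch(hazy))
print(equalize(hazy))
```

Contrast stretching depends only on the minimum and maximum, so a single outlier pixel can defeat it, whereas equalization uses the whole distribution and can over-amplify contrast in large flat regions.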

Color Correction Algorithms

Color correction algorithms aim to ensure that colors in the image are accurate and consistent. These algorithms often compensate for imperfections in the sensor, such as color casts or variations in color sensitivity across different wavelengths. White balance correction is a crucial aspect, adjusting the color temperature to achieve a neutral white point. Other techniques address color fringing, chromatic aberration, and other color-related artifacts. Accurate color correction ensures that the image faithfully represents the colors of the scene, enhancing the realism and visual appeal.
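One classic way to estimate white balance automatically is the gray-world assumption: averaged over the whole scene, the colour should come out neutral. The sketch below applies it to RGB values in the range 0 to 1. It is a simple stand-in for illustration, not the method any particular phone uses, and `gray_world` is a hypothetical name.

```python
def gray_world(pixels: list[tuple[float, float, float]]) -> list[tuple[float, ...]]:
    """Scale each channel so that all three channel means become equal."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Clip to 1.0 so boosted channels stay inside the valid range.
    return [tuple(min(1.0, p[c] * gains[c]) for c in range(3)) for p in pixels]


# Two neutral surfaces photographed under warm (orange-tinted) light.
warm = [(0.6, 0.4, 0.2), (0.3, 0.2, 0.1)]
balanced = gray_world(warm)
print(balanced)
```

The assumption fails for scenes dominated by one colour, such as a close-up of grass, which is one reason production pipelines combine several illuminant estimates.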

Hypothetical Low-Light Enhancement Algorithm

A hypothetical low-light enhancement algorithm could utilize a combination of techniques to improve image quality in challenging lighting conditions. Firstly, it could employ advanced noise reduction techniques specifically tailored for low-light scenarios, possibly involving sophisticated denoising algorithms that preserve fine details while effectively removing noise. Secondly, it could incorporate multi-frame processing, combining multiple images captured in quick succession to reduce noise and improve signal-to-noise ratio. Thirdly, the algorithm could employ advanced tone mapping techniques to effectively expand the dynamic range and enhance details in both shadows and highlights without sacrificing the overall image quality. Finally, it could incorporate AI-based techniques to intelligently enhance specific areas of the image based on scene understanding, such as identifying faces and enhancing them separately. This multi-faceted approach could significantly improve the quality of low-light images compared to simpler methods.

Noise Reduction Techniques

Digital noise in smartphone camera images is a common issue, significantly impacting image quality. It manifests as unwanted graininess or speckles, often more pronounced in low-light conditions. Effective noise reduction is crucial for delivering clean, visually appealing images, and various techniques are employed to mitigate this problem. These techniques aim to distinguish actual image detail from random noise patterns.

Noise reduction techniques in smartphone cameras generally fall into two categories: spatial and temporal methods. Spatial methods analyze the image itself to identify and reduce noise, while temporal methods leverage information from multiple frames to achieve noise reduction. The choice of method, or a combination thereof, depends on factors such as the sensor’s characteristics, processing power available, and the desired balance between noise reduction and detail preservation.

Spatial Noise Reduction Techniques

Spatial domain noise reduction techniques operate directly on the image data. These methods typically involve smoothing algorithms that average pixel values within a local neighborhood. This averaging process reduces the impact of random noise, but it can also blur fine details. The effectiveness of spatial methods varies considerably depending on the noise level and the chosen algorithm. A simple example is a mean filter, where the value of each pixel is replaced by the average of its neighboring pixels. More sophisticated techniques, such as median filtering and bilateral filtering, are designed to better preserve edges and details while still effectively reducing noise. Median filtering replaces each pixel with the median value of its neighbors, robustly removing impulsive noise (salt-and-pepper noise), while bilateral filtering considers both spatial distance and intensity difference when averaging pixel values, allowing for better edge preservation.
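The difference between mean and median filtering is easy to see on one row of pixels containing a single "salt" outlier. This is a minimal one-dimensional sketch with clamped borders; `filter_1d` is an illustrative helper, not a library function.

```python
from statistics import mean, median


def filter_1d(signal, reducer, radius=1):
    """Replace each sample with reducer(neighbourhood); indices are clamped at the ends."""
    n = len(signal)
    return [
        reducer([signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)])
        for i in range(n)
    ]


row = [20, 20, 255, 20, 20, 20]  # one impulsive outlier in a flat region
mean_filtered = filter_1d(row, mean)
median_filtered = filter_1d(row, median)
print([round(v, 1) for v in mean_filtered])
print(median_filtered)
```

The mean filter spreads the outlier across its neighbours, while the median filter discards it completely because an isolated extreme value never lands in the middle of the sorted window.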

Temporal Noise Reduction Techniques

Temporal noise reduction leverages multiple frames captured in quick succession. By comparing and averaging pixel values across these frames, noise – which is typically random and varies between frames – can be significantly reduced. This is particularly effective in low-light conditions where noise is more prominent. A common approach is multi-frame averaging, where the corresponding pixels in multiple frames are averaged. More advanced techniques, such as motion-compensated temporal filtering, account for movement between frames to avoid blurring moving objects. The effectiveness of temporal noise reduction depends on the stability of the scene and the camera’s ability to capture frames quickly and accurately. For instance, in a stable scene with minimal motion, temporal noise reduction can significantly improve image quality. However, in dynamic scenes with significant motion, motion blur can become a problem if the motion compensation is not accurate enough.
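Multi-frame averaging can be simulated in a few lines. The sketch below assumes a perfectly static, already aligned scene and independent Gaussian noise in every frame; the scene values, noise level and helper names are made up for illustration.

```python
import random
from statistics import pstdev


def capture_frame(scene, noise_sigma, rng):
    """Simulate one noisy capture of a static scene."""
    return [value + rng.gauss(0.0, noise_sigma) for value in scene]


def average_frames(frames):
    """Average corresponding pixels across frames (assumes the frames are aligned)."""
    n = len(frames)
    return [sum(samples) / n for samples in zip(*frames)]


rng = random.Random(0)   # fixed seed so the run is repeatable
scene = [100.0] * 2000   # flat grey patch
frames = [capture_frame(scene, 10.0, rng) for _ in range(16)]

single_noise = pstdev(frames[0])
merged_noise = pstdev(average_frames(frames))
print(f"one frame: {single_noise:.2f}, 16 frames averaged: {merged_noise:.2f}")
```

For independent noise, averaging N frames lowers the standard deviation by roughly the square root of N, so 16 frames give about a fourfold improvement. Any motion between frames breaks the alignment assumption, which is why motion compensation matters.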

Comparison of Noise Reduction Methods in Different Lighting Conditions

In bright light conditions, noise is typically less of a concern, and aggressive noise reduction algorithms may not be necessary. In such cases, simpler spatial methods might suffice, prioritizing detail preservation over extreme noise reduction. However, in low-light scenarios, noise becomes significantly more prominent. Here, temporal methods often prove superior, as they can effectively reduce noise by leveraging information from multiple frames. A combination of spatial and temporal methods is often used for optimal results, where spatial filtering refines the result obtained from temporal averaging. The choice of algorithm also depends on the type of noise. For example, median filtering is particularly effective against salt-and-pepper noise, while bilateral filtering is better suited to Gaussian-like noise, a common model for sensor read noise.

Impact of Noise Reduction Algorithms on Image Detail

Noise reduction algorithms inevitably involve a trade-off between noise reduction and detail preservation. Aggressive noise reduction can lead to a loss of fine details and a reduction in image sharpness. This is particularly noticeable in areas with fine textures or sharp edges. Conversely, insufficient noise reduction leaves the image grainy and noisy. Therefore, the choice of noise reduction algorithm and its parameters are crucial in achieving a balance between noise reduction and detail preservation. For example, a strong mean filter will significantly reduce noise but will also blur the image significantly. A more sophisticated algorithm, such as a bilateral filter, will achieve comparable noise reduction while preserving more detail. The optimal balance depends on the specific image and the user’s preferences.
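The trade-off can be demonstrated on a noisy step edge. This one-dimensional sketch compares a plain mean filter with a bilateral filter; the parameters `sigma_space` and `sigma_range` are illustrative choices, not values taken from any real pipeline.

```python
import math


def mean_1d(signal, radius=2):
    """Plain moving average over a window truncated at the ends."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def bilateral_1d(signal, radius=2, sigma_space=1.5, sigma_range=10.0):
    """Weighted average where a neighbour's weight falls with both its
    distance from the centre and its difference in intensity."""
    n = len(signal)
    out = []
    for i in range(n):
        total = weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2 * sigma_space ** 2))
            w_range = math.exp(-((signal[i] - signal[j]) ** 2) / (2 * sigma_range ** 2))
            total += w_space * w_range * signal[j]
            weight_sum += w_space * w_range
        out.append(total / weight_sum)
    return out


step = [10, 12, 9, 11, 100, 102, 99, 101]  # noisy dark region, then noisy bright region
print([round(v, 1) for v in mean_1d(step)])
print([round(v, 1) for v in bilateral_1d(step)])
```

The mean filter drags the values next to the edge toward the middle and smears it, while the bilateral filter gives almost no weight to samples on the other side of the step and so smooths each region separately.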

Image Stabilization

Image stabilization is a crucial feature in modern smartphone cameras, significantly improving image quality, especially in challenging shooting conditions. It compensates for camera shake, ensuring sharper and clearer photos and videos, even when the user’s hand isn’t perfectly steady. This is achieved through a combination of hardware and software techniques, each with its own strengths and weaknesses.

Optical Image Stabilization (OIS) and Electronic Image Stabilization (EIS) are the two primary methods employed.

Optical Image Stabilization (OIS)

OIS uses a tiny gyroscope within the camera module to detect movement. This sensor then signals a tiny motor to adjust the position of the image sensor or lens, counteracting the camera shake in real-time. This physical adjustment minimizes blurring before the image is even captured. High-end smartphones often utilize OIS, especially in their main cameras.

Electronic Image Stabilization (EIS)

EIS, in contrast, is a software-based solution. It estimates camera motion across a series of frames (from the image content, often assisted by gyroscope data) and shifts or warps a cropped window within each frame so that the scene stays steady. Because a margin around the frame must be reserved for this cropping, EIS reduces the field of view and often yields lower effective resolution than OIS. However, it’s a more cost-effective solution and can be implemented across all cameras in a smartphone.
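The cropping idea behind EIS can be sketched for horizontal shake only. The example assumes the per-frame shift of the scene content has already been measured (in pixels) and that a fixed margin is reserved on each side of the frame; `smooth_path` and `crop_offsets` are hypothetical helpers.

```python
def smooth_path(path, radius=2):
    """Moving average of the measured path: the motion we want to keep."""
    n = len(path)
    out = []
    for i in range(n):
        window = path[max(0, i - radius):i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def crop_offsets(content_shift, margin, radius=2):
    """Left edge of the crop window for each frame.

    The window follows the jitter (measured shift minus smoothed shift), so
    content inside the crop moves only along the smoothed path. Offsets are
    clamped to the reserved margin on either side.
    """
    smooth = smooth_path(content_shift, radius)
    offsets = []
    for raw, target in zip(content_shift, smooth):
        jitter = raw - target
        offsets.append(min(max(margin + jitter, 0.0), 2.0 * margin))
    return offsets


shake = [0, 4, -3, 5, -4, 3, 0]   # measured content shift per frame, in pixels
margin = 8                        # pixels reserved on each side of the frame
offsets = crop_offsets(shake, margin)

# Where the content appears inside the stabilized (cropped) output.
stabilized = [s - (o - margin) for s, o in zip(shake, offsets)]
print([round(v, 2) for v in stabilized])
```

The output frame is narrower than the sensor frame by twice the margin, which is the field-of-view cost described above. Shake larger than the margin gets clamped and leaks through as residual movement.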

Comparison of OIS and EIS

The choice between OIS and EIS involves a trade-off. OIS corrects shake optically while the exposure is taking place, so it reduces motion blur within each frame and costs no resolution, which is especially valuable for low-light stills and for video. However, it’s more expensive to implement and requires more physical space within the device. EIS, being software-based, is more affordable and can be implemented on lower-cost smartphones. However, it often results in a slight reduction in resolution, cannot undo blur already recorded within a frame, and can struggle with significant camera shake. Many smartphones utilize a combination of both OIS and EIS for optimal results, leveraging the strengths of each technology.

Image Stabilization Algorithms

The effectiveness of image stabilization relies heavily on sophisticated algorithms. These algorithms analyze the sensor data from the gyroscope (in OIS) or the image sequence (in EIS) to identify and quantify the movement. Advanced algorithms use sophisticated motion models to predict future movement and compensate accordingly. For EIS, algorithms might use techniques like frame interpolation or motion estimation to create a stabilized sequence. The goal is to minimize the effect of camera shake on the final image, resulting in improved sharpness and reduced blur. The impact on image sharpness is significant; without stabilization, even slight hand movement can lead to blurry images, especially in low-light conditions. Stabilization algorithms dramatically improve the success rate of capturing sharp images in these situations.

High Dynamic Range (HDR) Imaging

High Dynamic Range (HDR) imaging is a crucial feature in modern smartphone cameras, significantly enhancing the quality of photos by capturing a wider range of brightness levels than traditional methods. This allows for more detail in both the highlights and shadows of an image, resulting in a more realistic and visually appealing final product. HDR imaging cleverly overcomes the limitations of standard image sensors which struggle to capture the full dynamic range of a scene, particularly when dealing with high contrast situations like sunsets or brightly lit interiors.

HDR image processing involves combining multiple exposures of the same scene, each with a different exposure time. This technique allows the camera to capture data from both the bright and dark areas of the scene, avoiding clipping in the highlights and loss of detail in the shadows. The challenge lies in seamlessly merging these different exposures into a single, natural-looking image.

HDR Image Capture and Processing

The process of capturing and processing HDR images on smartphones involves several key steps. These steps work together to create an image that accurately represents the dynamic range of the original scene, maintaining detail across a wide range of brightness levels. This is achieved through a combination of hardware and sophisticated software algorithms.

- Multiple Exposure Capture: The smartphone camera rapidly captures a series of images of the same scene, each with a different exposure setting (e.g., underexposed, correctly exposed, and overexposed). This is typically done in quick succession, often unnoticed by the user.

- Alignment: Slight movements of the camera between shots can lead to misalignment. Sophisticated algorithms are used to precisely align the multiple exposures, correcting for any camera shake or subject movement. This is crucial for seamless merging.

- Merging and Tone Mapping: This is a critical step where the different exposures are combined. Algorithms analyze the brightness and color information from each exposure and take the best-exposed detail from each, producing a merged image with a wider dynamic range than any single exposure could capture. Tone mapping then compresses this wide range of tones into the limited range that can be displayed on a screen or printed.

- Noise Reduction: Shadow regions that are brightened during tone mapping, and frames captured with short exposures, carry relatively more noise, so HDR images can show increased noise. Specialized noise reduction techniques are applied to minimize this noise while preserving image detail.

- Color Correction: The final step often involves subtle color adjustments to ensure the resulting HDR image appears natural and balanced, further enhancing the overall quality and realism.
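The merging and tone-mapping steps above can be sketched for a single pixel. The example assumes linear sensor values in the range 0 to 1 from frames that are already aligned, a simple triangular ("hat") weighting, and the Reinhard global operator for tone mapping; the function names and exposure times are illustrative.

```python
def hat_weight(value: float) -> float:
    """Trust mid-tones most; values near 0 (noisy) or 1 (clipped) get little weight."""
    return max(1e-4, 1.0 - abs(2.0 * value - 1.0))


def merge_exposures(pixel_values, exposure_times):
    """Estimate relative scene radiance for one pixel from bracketed exposures.

    Each frame gives an estimate value / exposure_time; the estimates are
    combined with a weighted average.
    """
    numerator = denominator = 0.0
    for value, time in zip(pixel_values, exposure_times):
        weight = hat_weight(value)
        numerator += weight * (value / time)
        denominator += weight
    return numerator / denominator


def reinhard(radiance: float) -> float:
    """Global tone-mapping curve that compresses [0, infinity) into [0, 1)."""
    return radiance / (1.0 + radiance)


times = [0.25, 1.0, 4.0]         # short, normal and long exposure (relative units)
bright_pixel = [0.5, 1.0, 1.0]   # clipped in the two longer exposures
dark_pixel = [0.0125, 0.05, 0.2]

bright_radiance = merge_exposures(bright_pixel, times)
dark_radiance = merge_exposures(dark_pixel, times)
print(round(bright_radiance, 3), round(reinhard(bright_radiance), 3))
print(round(dark_radiance, 3), round(reinhard(dark_radiance), 3))
```

The bright pixel takes its value almost entirely from the short exposure, the only frame in which it is not clipped, while the dark pixel leans on the long exposure where its signal is strongest.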

Challenges in HDR Imaging and Their Solutions

Several challenges exist in creating high-quality HDR images on smartphones. These challenges are largely related to computational complexity, the limitations of sensor technology, and the need to achieve real-time processing.

- Computational Cost: Processing multiple exposures and applying complex algorithms requires significant computational power. Smartphones address this through optimized algorithms and specialized hardware, such as dedicated image processing units (IPUs).

- Ghosting Artifacts: Movement between exposures can cause “ghosting” artifacts – blurry or duplicated elements in the final image. Sophisticated alignment algorithms minimize this, and techniques like temporal filtering can help reduce the impact of minor movements.

- Real-time Processing: Users expect near-instantaneous results. Advanced hardware and software optimizations are crucial for achieving real-time HDR processing on smartphones, making the technology seamlessly integrated into the user experience.

- Power Consumption: HDR processing is computationally intensive and can drain the phone’s battery. Efficient algorithms and power management techniques are employed to minimize power consumption.

Low-Light Photography Techniques

Low-light photography presents significant challenges for smartphone cameras, primarily due to the limited amount of light available to the sensor. This results in images that are often noisy, grainy, and lack detail. However, advancements in image processing have dramatically improved the quality of low-light images captured by smartphones, allowing for surprisingly good results even in very dark environments. These improvements leverage a combination of hardware and software techniques.

The role of image processing in enhancing low-light images is crucial. Raw sensor data acquired in low light is inherently noisy and lacks sufficient detail. Sophisticated algorithms are employed to mitigate this noise, amplify the signal, and reconstruct a clearer, more detailed image. These algorithms are computationally intensive and rely heavily on the processing power of the smartphone’s processor.

Noise Reduction Techniques in Low-Light Photography

Several noise reduction techniques are implemented in modern smartphone cameras to combat the graininess inherent in low-light images. These techniques range from simple averaging filters to more complex algorithms that analyze the image’s texture and structure to differentiate between actual image details and noise. Spatial filtering techniques, such as bilateral filtering and non-local means (NLM) filtering, are commonly used to reduce noise while preserving edges and details. These algorithms work by averaging pixel values, but they intelligently weigh the contribution of each pixel based on its similarity to its neighbors. This ensures that noise is reduced while important image features are retained. Furthermore, temporal noise reduction techniques, which average multiple frames taken in quick succession, can further improve the signal-to-noise ratio. This approach is particularly effective in reducing noise caused by the sensor’s readout process.

Signal Amplification and Detail Enhancement

In low-light conditions, the signal from the sensor is weak. Image processing algorithms play a crucial role in amplifying this weak signal to improve image brightness. However, simply amplifying the signal also amplifies the noise, so this process must be carefully balanced. Sophisticated algorithms are used to selectively amplify the signal in regions of interest, while minimizing noise amplification in other areas. Furthermore, techniques like wavelet denoising are employed to keep fine details visible even in low light. For instance, a wavelet transform decomposes the image into different frequency bands, allowing small high-frequency coefficients (mostly noise) to be suppressed while large coefficients (edges and texture) and the low-frequency content are preserved.
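Wavelet denoising can be illustrated with a single-level Haar transform on one row of pixels. This sketch assumes an even-length signal and soft thresholding of the detail coefficients; practical implementations use several decomposition levels and two-dimensional transforms, and the threshold here is chosen by hand.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_forward(signal):
    """Split an even-length signal into pairwise averages and differences."""
    approx = [(signal[i] + signal[i + 1]) / SQRT2 for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / SQRT2 for i in range(0, len(signal), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Rebuild the signal from approximation and detail coefficients."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / SQRT2, (a - d) / SQRT2])
    return out


def soft_threshold(coefficient, threshold):
    """Shrink a coefficient toward zero; anything smaller than the threshold becomes 0."""
    return math.copysign(max(abs(coefficient) - threshold, 0.0), coefficient)


def denoise(signal, threshold):
    approx, detail = haar_forward(signal)
    detail = [soft_threshold(d, threshold) for d in detail]
    return haar_inverse(approx, detail)


noisy = [10, 12, 11, 9, 50, 52, 49, 51]  # small fluctuations around a large step
cleaned = denoise(noisy, threshold=2.0)
print([round(v, 1) for v in cleaned])
```

The small pairwise differences fall below the threshold and are removed, while the large step between the two halves lives in the approximation coefficients and passes through unchanged.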

Comparison of Low-Light Enhancement Approaches

Different approaches to low-light image enhancement offer varying trade-offs between noise reduction, detail preservation, and computational cost. Simple averaging filters are computationally inexpensive but may result in significant blurring of details. More advanced algorithms, such as NLM filtering and wavelet denoising, offer better noise reduction and detail preservation but are more computationally intensive. The choice of algorithm depends on the specific requirements of the application and the available processing power. For example, a computationally less demanding algorithm might be chosen for real-time applications, such as video recording, whereas a more computationally intensive algorithm might be preferred for still images where processing time is less critical. The latest smartphones often employ a combination of these techniques to achieve optimal results.

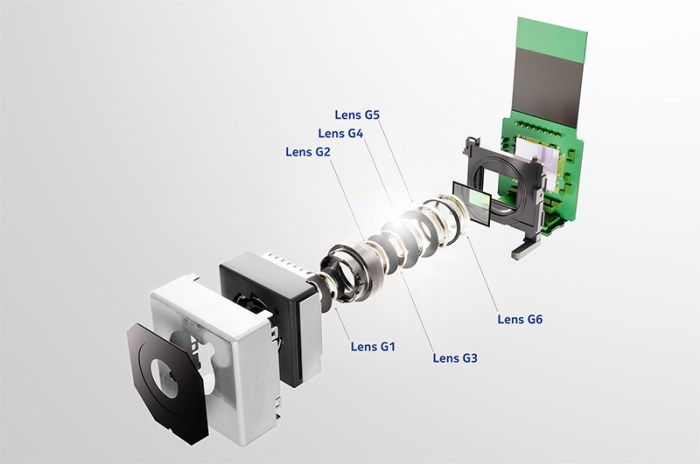

Depth Sensing and Bokeh Effects

Smartphone cameras have increasingly incorporated depth sensing capabilities, significantly enhancing their photographic versatility. This technology allows for the creation of sophisticated effects, most notably the aesthetically pleasing bokeh effect, which blurs the background of an image, drawing attention to the subject in the foreground. This is achieved through the precise measurement and interpretation of distances between the camera and various points within the scene.

Depth sensing in smartphones relies on several methods, each with its strengths and weaknesses. Stereo vision, for instance, uses two cameras positioned slightly apart, mimicking human binocular vision. By comparing the images from both lenses, the device calculates depth information based on the parallax—the apparent shift in an object’s position as seen from different viewpoints. Time-of-flight (ToF) sensors, on the other hand, emit infrared light pulses and measure the time it takes for the light to reflect back, directly determining the distance to objects. Structured light techniques project a pattern of light onto the scene and analyze the distortion of that pattern to infer depth. Finally, monocular depth estimation uses a single camera and sophisticated algorithms to analyze image features and estimate depth from a single perspective. Each method presents a trade-off between accuracy, cost, and power consumption.
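The geometry behind the first two methods reduces to short formulas. The sketch below assumes an idealized, rectified stereo pair (pinhole model) and a direct time-of-flight measurement; the focal length, baseline and timing values are made-up examples.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from parallax for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def tof_depth_m(round_trip_s: float) -> float:
    """Depth from a light pulse's round-trip time: the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Hypothetical phone: 1500 px focal length, cameras 12 mm apart, 12 px disparity.
print(f"stereo: {stereo_depth_m(1500.0, 0.012, 12.0):.2f} m")
# A pulse that returns after 10 nanoseconds.
print(f"ToF:    {tof_depth_m(10e-9):.2f} m")
```

Because depth is inversely proportional to disparity, the short baseline between phone cameras makes stereo estimates increasingly coarse for distant objects, one of the accuracy trade-offs noted above.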

Depth Map Generation

The core of depth-based image processing lies in the creation of a depth map. This is a representation of the scene where each pixel is assigned a value corresponding to its distance from the camera. The accuracy of this map directly impacts the quality of the resulting bokeh effect. The process begins with the raw data captured by the depth sensor, which could be a pair of stereo images, ToF sensor readings, or a structured light pattern. Algorithms then process this data to generate a detailed depth map. This often involves image registration (aligning images from multiple sensors) and noise reduction to ensure a smooth and accurate depth representation. The resulting depth map is commonly visualized as a grayscale image; in one widespread convention, brighter pixels represent closer objects and darker pixels represent farther objects.

Bokeh Effect Generation Using Depth Maps

Once a depth map is generated, it’s used to create the bokeh effect. This involves several steps. First, the depth map is segmented to identify the foreground (in-focus) and background (out-of-focus) regions. Pixels corresponding to the background are identified based on their depth values. Then, a blurring algorithm is applied selectively to the background pixels. The strength of the blur is typically adjusted based on how far a pixel lies from the plane of focus; in a portrait, that means objects further behind the subject are blurred more strongly. This creates the characteristic gradual blurring effect associated with bokeh. Finally, the blurred background is recombined with the sharp foreground to produce the final image with a convincing bokeh effect. Different blurring algorithms, such as Gaussian blur or bilateral filtering, can be used to achieve various aesthetic qualities in the bokeh. The choice of algorithm often influences the smoothness and natural appearance of the blur. Some algorithms even attempt to simulate the characteristic circular highlights (bokeh balls) produced by certain lens designs.
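The depth-dependent blur step can be sketched on one row of pixels. This minimal example assumes a per-pixel depth in metres and uses a box blur whose radius grows with distance from the focus plane; `blur_per_metre` and `max_radius` are illustrative tuning knobs, not parameters of any real camera API.

```python
def synthetic_bokeh(row, depth_m, focus_m, blur_per_metre=1.0, max_radius=3):
    """Blur each pixel with a box filter whose radius grows with the pixel's
    distance from the focus plane; pixels at the focus depth stay sharp."""
    n = len(row)
    out = []
    for i in range(n):
        radius = min(max_radius, round(blur_per_metre * abs(depth_m[i] - focus_m)))
        window = row[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


row = [0, 100, 0, 100, 0, 100, 0, 100]   # high-contrast texture everywhere
depth = [1, 1, 1, 1, 4, 4, 4, 4]         # subject at 1 m, background at 4 m
result = synthetic_bokeh(row, depth, focus_m=1)
print([round(v, 1) for v in result])
```

The subject keeps its full contrast while the background texture is flattened. Note that this naive gather-style blur lets foreground pixels bleed into the background near the boundary, an artifact that production pipelines handle with layered or edge-aware blurring.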

Examples of Bokeh Effect Implementation

Consider a portrait photo taken with a smartphone featuring depth sensing. The depth map would clearly delineate the subject (person) in the foreground and the background (e.g., a landscape or cityscape). The image processing pipeline would then apply a stronger blur to the distant background elements, creating a shallow depth of field effect. The level of blur applied is crucial; too much blur can appear unnatural, while too little fails to create a noticeable bokeh effect. The choice of blurring algorithm also impacts the final aesthetic: a Gaussian blur might produce a softer, more diffused effect, while a bilateral filter, being edge-preserving, could retain more edge detail in the blurred regions. High-end smartphones often use sophisticated algorithms that dynamically adjust the blur intensity and type based on the scene content and depth map characteristics, resulting in a more natural and visually appealing bokeh.

Artificial Intelligence (AI) in Image Processing

Artificial intelligence has revolutionized smartphone photography, moving beyond basic image enhancement to offer sophisticated features and a more intuitive user experience. AI algorithms analyze images in real time, making adjustments and applying effects that previously required significant user intervention. This processing allows for substantial improvements in image quality and wider creative possibilities.

AI’s role in smartphone image processing spans numerous aspects, from initial image capture to final output. It empowers features that enhance clarity, detail, and overall aesthetic appeal, often adapting to different shooting conditions and user preferences. This adaptability is a key differentiator between traditional image processing and AI-powered solutions.

Scene Detection and Object Recognition

AI algorithms excel at scene detection, identifying the subject matter and environment of a photograph (e.g., portrait, landscape, sports event, food). This information is then used to automatically adjust camera settings such as exposure, white balance, and focus, optimizing the image for the specific scene. Object recognition allows for further enhancements. For instance, AI can detect faces and automatically adjust focus and exposure for optimal portrait shots, ensuring sharp details and natural skin tones. Similarly, it can identify objects like flowers or animals, applying specific filters or enhancements to highlight their features. Consider the example of Google’s Pixel phones; their computational photography capabilities leverage AI to dramatically improve low-light performance and detail in images.

AI-Powered Image Enhancement

Beyond basic adjustments, AI drives more sophisticated image enhancements. For example, AI can intelligently upscale lower-resolution images, synthesizing plausible detail to create a higher-resolution output with minimal artifacts. This is particularly useful for older photos or images taken with less powerful cameras. AI can also be used for image restoration, removing blemishes, scratches, or noise from photos to improve their overall quality. The results can rival careful manual editing while taking far less time. The AI-powered features in Adobe Photoshop illustrate the same capability in desktop software.

Advantages and Limitations of AI in Smartphone Cameras

The advantages of AI in smartphone cameras are numerous. AI enables faster and more accurate image processing, leading to improved image quality and a more user-friendly experience. It automates tasks that previously required significant user expertise, making high-quality photography accessible to a wider audience. AI-powered features also allow for greater creative control, offering a range of sophisticated effects and adjustments that are easily applied with minimal effort.

However, AI also has limitations. The computational demands of AI algorithms can drain battery life and generate heat. There can also be issues with accuracy, particularly in complex or unusual scenes. Furthermore, the reliance on AI can sometimes lead to a loss of creative control for users who prefer more manual adjustments. Finally, concerns about data privacy arise due to the need for AI systems to process and analyze large amounts of image data. Balancing these advantages and limitations is a crucial ongoing challenge in the development of AI-powered smartphone cameras.

In conclusion, the journey from raw sensor data to the vibrant images we share on social media is a complex and fascinating process. The sophisticated interplay of image sensor technology, computational photography techniques, and advanced algorithms has transformed the smartphone camera into a powerful imaging tool. As technology continues to evolve, we can anticipate even more impressive advancements in the years to come, promising even higher quality and more innovative photographic capabilities in our pockets.

FAQ Compilation

What is the difference between CMOS and CCD image sensors?

CMOS sensors are now the standard in smartphones due to their lower power consumption and ability to integrate processing directly onto the chip. CCD sensors, while historically offering superior image quality in some situations, are less energy efficient and more expensive.

How does HDR improve image quality?

HDR (High Dynamic Range) imaging captures multiple exposures of a scene with varying brightness levels. These exposures are then combined to create a single image with a wider range of tones, resulting in more detail in both highlights and shadows.
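As a rough illustration of the combining step, the sketch below blends several exposures pixel by pixel, giving more weight to values near mid-gray, which are neither clipped nor crushed. It is a simplified weighted blend under stated assumptions: frames are already aligned, grayscale, and normalized to the range 0 to 1. The function names and the `sigma` value are illustrative choices, and real HDR pipelines also perform alignment, ghost removal, and tone mapping, all omitted here.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Weight in (0, 1]: highest at mid-gray (0.5), lowest near pure black or white."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(exposures):
    """Blend same-sized grayscale frames (values in [0, 1]) pixel by pixel."""
    height, width = len(exposures[0]), len(exposures[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [frame[y][x] for frame in exposures]
            weights = [well_exposedness(v) for v in values]
            # Weighted average: well-exposed samples dominate the result.
            row.append(sum(w * v for w, v in zip(weights, values)) / sum(weights))
        fused.append(row)
    return fused


# Hypothetical one-pixel frames: a crushed shadow (0.05) and a well-exposed sample (0.6).
fused = fuse_exposures([[[0.05]], [[0.6]]])
```

In this example the fused value lands much closer to 0.6 than to 0.05, because the underexposed sample receives a small weight.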

What is the role of AI in smartphone cameras?

AI plays an increasingly vital role, enabling features like scene detection, object recognition, and automatic subject tracking. AI algorithms can also enhance image quality through advanced noise reduction and computational photography techniques.

Can I improve my smartphone photos without using computational photography features?

Yes, using good lighting, proper composition, and understanding basic photographic principles will significantly enhance your images. However, computational photography features can help overcome limitations of the hardware and enhance images further.
