How to improve Google Assistant’s voice recognition accuracy is a question many users grapple with. Frustration with misinterpretations and missed commands is common, stemming from factors ranging from noisy environments to individual speech patterns. This guide explores practical strategies to enhance Google Assistant’s understanding, covering microphone optimization, speech techniques, and leveraging built-in settings to achieve more accurate voice recognition.

From optimizing microphone placement and understanding the impact of different microphone types to mastering clear articulation and utilizing Google Assistant’s adjustable sensitivity settings, we’ll delve into a comprehensive approach. We will also address challenges posed by background noise, accents, and dialects, providing solutions and workarounds to overcome these obstacles. Finally, we’ll explore alternative input methods and the importance of user feedback in continuously improving the Google Assistant experience.

Understanding Google Assistant’s Voice Recognition Challenges

Google Assistant is remarkably advanced, but it isn’t perfect. Its voice recognition is still susceptible to several factors that can significantly reduce accuracy. Understanding these challenges is crucial for optimizing its performance and managing expectations.

Google Assistant’s voice recognition, like all similar technologies, relies on complex algorithms to translate spoken words into text. This process is surprisingly intricate and prone to errors under certain conditions. Several factors contribute to these inaccuracies, and a grasp of these limitations is essential for improving the overall user experience.

Common Scenarios Leading to Voice Recognition Errors

Several common situations frequently cause Google Assistant to misinterpret speech. These scenarios highlight the limitations of current technology and offer insights into potential areas for improvement. For example, noisy environments, such as crowded restaurants or bustling streets, often lead to significant errors. Similarly, accents and dialects can present considerable challenges, as the system may not be adequately trained on the nuances of specific speech patterns. Finally, poor articulation or mumbled speech, even in quiet environments, can hinder accurate recognition. These scenarios demonstrate the system’s sensitivity to various contextual factors.

Factors Influencing Voice Recognition Accuracy

Background noise is a major hurdle for accurate voice recognition. The system struggles to isolate the user’s voice from ambient sounds, leading to misinterpretations. Consider the difference between giving a command in a quiet room versus a busy coffee shop; the latter scenario significantly increases the chance of errors. Similarly, accents and dialects pose a significant challenge. The algorithms are trained on vast datasets, but these datasets might not represent the full diversity of human speech. This results in reduced accuracy for users with accents not prominently featured in the training data. Finally, speech clarity plays a crucial role. Mumbling, speaking too quickly, or having a quiet voice can all contribute to recognition errors.
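The quiet-room versus coffee-shop comparison can be made concrete with a signal-to-noise ratio (SNR), a standard way to express how far speech stands above background sound. The following is a minimal sketch with hypothetical amplitude samples rather than real recordings; it does not describe how Google Assistant measures noise internally.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a list of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def snr_db(speech, noise):
    """Signal-to-noise ratio in decibels: 20 * log10(rms_speech / rms_noise)."""
    return 20 * math.log10(rms(speech) / rms(noise))

# Hypothetical amplitude samples (assumed values, not measurements).
speech = [0.5, -0.5, 0.5, -0.5]
quiet_room = [0.005, -0.005, 0.005, -0.005]
coffee_shop = [0.05, -0.05, 0.05, -0.05]

print(round(snr_db(speech, quiet_room)))   # 40
print(round(snr_db(speech, coffee_shop)))  # 20
```

In this toy case the same voice sits 40 dB above the quiet room but only 20 dB above the coffee shop, which is why moving closer to the microphone or away from the noise source tends to help.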

Technical Limitations of Current Voice Recognition Technology

Current voice recognition technology relies heavily on statistical models trained on massive datasets of audio recordings. While these models have improved dramatically in recent years, they still face inherent limitations. One major challenge is the ambiguity of human speech. Homophones (words that sound alike but have different meanings, like “there,” “their,” and “they’re”) often lead to errors. Furthermore, contextual understanding remains a significant hurdle. The system struggles to interpret the intended meaning when the speech lacks sufficient clarity or is grammatically incorrect. These technical challenges highlight the ongoing research and development needed to further enhance voice recognition accuracy.
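To illustrate why context matters for homophones, here is a toy sketch of a statistical approach: counting which word pairs appear together in training text and choosing the spelling that best fits its neighbours. The corpus, the `pick_homophone` helper, and the scoring rule are all assumptions made for illustration; production recognizers use far larger models, and this is not Google’s implementation.

```python
from collections import Counter

# Tiny hypothetical corpus standing in for a massive training dataset.
corpus = (
    "their house is big . they left their keys . "
    "the keys are over there . put it there . "
    "they're late again . i think they're coming ."
).split()

# Count how often each pair of adjacent words occurs.
bigrams = Counter(zip(corpus, corpus[1:]))

HOMOPHONES = ["there", "their", "they're"]

def pick_homophone(prev_word, next_word):
    """Choose the spelling whose neighbours were seen most often in the corpus."""
    def score(candidate):
        return bigrams[(prev_word, candidate)] + bigrams[(candidate, next_word)]
    return max(HOMOPHONES, key=score)

print(pick_homophone("left", "keys"))     # their
print(pick_homophone("over", "."))        # there
print(pick_homophone("think", "coming"))  # they're
```

The audio is identical for all three spellings, so only the surrounding words separate them. This is one reason a complete, grammatical phrase is usually recognized more reliably than an isolated word.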

Optimizing User Speech Patterns

Google Assistant’s voice recognition accuracy depends heavily on how you speak to it. Speaking clearly and concisely, while paying attention to pronunciation and articulation, makes your requests easier for the system to understand. Even small adjustments in your speaking style can lead to noticeable improvements in accuracy.

Speaking to Google Assistant is similar to speaking to a person who’s learning a new language – clear and precise communication is key. Ambiguity, mumbled words, or rapid speech can easily confuse the system. By adopting some simple best practices, you can help Google Assistant understand you better and provide more accurate results.

Best Practices for Clear and Concise Speech

Clear and concise speech minimizes the chances of misinterpretation by Google Assistant. Avoid using slang, jargon, or overly complex sentence structures. Instead, opt for simple, direct phrasing. For example, instead of saying “Hey Google, play that really cool song I listened to yesterday, the one with the catchy beat,” try “Hey Google, play the song I listened to yesterday.” The more concise your request, the easier it is for the Assistant to understand. Furthermore, speaking at a natural pace, neither too fast nor too slow, helps ensure that each word is clearly processed. Pausing slightly between words or phrases can also improve recognition accuracy.

Pronunciation and Articulation’s Impact on Voice Recognition

Precise pronunciation and clear articulation are crucial for accurate voice recognition. The way you pronounce words directly affects the system’s ability to interpret your speech. Slurring words together, mumbling, or speaking with a strong accent can significantly hinder the Assistant’s understanding. For instance, mispronouncing “weather” as “wedder” could lead to the Assistant failing to understand your request for a weather update. Similarly, unclear articulation of consonants can make it difficult for the system to distinguish between similar-sounding words. Paying close attention to your pronunciation and ensuring each syllable is clearly articulated greatly improves the accuracy of voice recognition.

Common Pronunciation Errors and Corrective Measures

Understanding common pronunciation errors and how to correct them can drastically improve your interaction with Google Assistant. Here are some examples:

- Problem: Blurring similar-sounding words (e.g., “fifteen” and “fifty,” or “can” and “can’t”).

Solution: Pay attention to the differences in stress and final consonant sounds. Practice pronouncing these words individually to reinforce correct articulation. True homophones such as “there” and “their” sound identical, so the Assistant has to resolve them from context; speaking a complete phrase helps more than careful pronunciation.

- Problem: Mumbling or speaking too quickly.

Solution: Consciously slow down your speech and enunciate each word clearly. Practice speaking in a deliberate manner.

- Problem: Swallowing consonants or vowels at the end of words.

Solution: Focus on pronouncing the final sounds of words fully and distinctly. Practice exaggerating these sounds slightly until they become natural.

- Problem: Using regional accents or dialects.

Solution: While Google Assistant is designed to handle various accents, speaking in a clear, standard pronunciation can improve accuracy. Consider practicing standard pronunciation for common words and phrases.

- Problem: Using filler words excessively (e.g., “um,” “uh”).

Solution: Be mindful of filler words and strive to speak more concisely. Practice speaking without using these words.

Leveraging Google Assistant Settings

Google Assistant’s settings also affect voice recognition accuracy. Adjusting them can improve how well it understands your commands and queries, leading to a smoother and more efficient user experience.

Several key settings within the Google Assistant app influence voice recognition performance. These settings allow you to tailor the Assistant’s behavior to your specific voice characteristics and environment, ultimately enhancing accuracy and reducing frustrating misunderstandings.

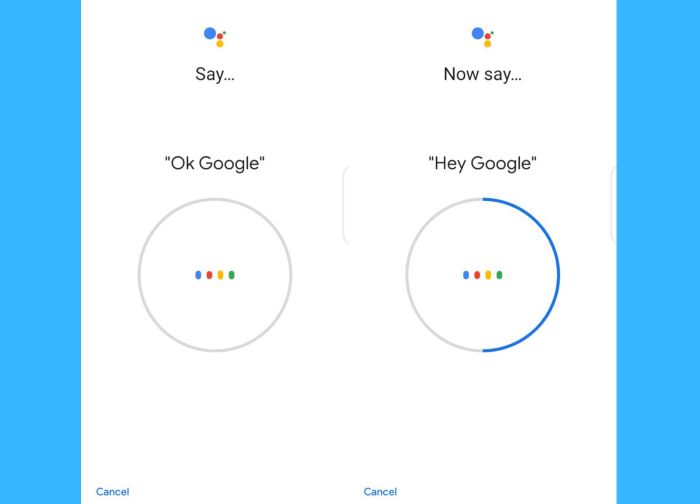

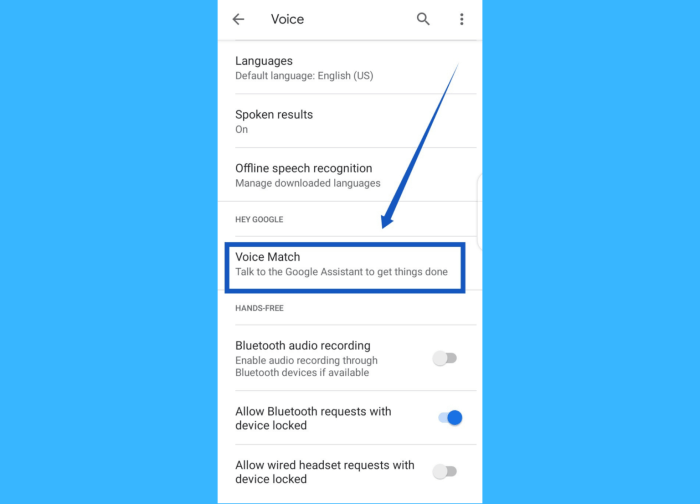

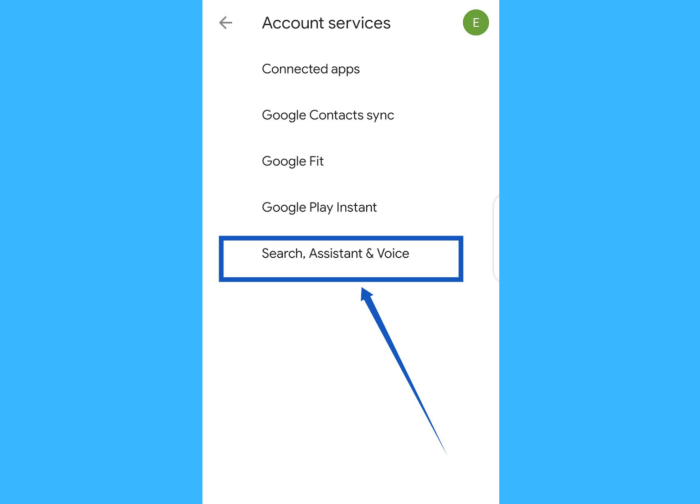

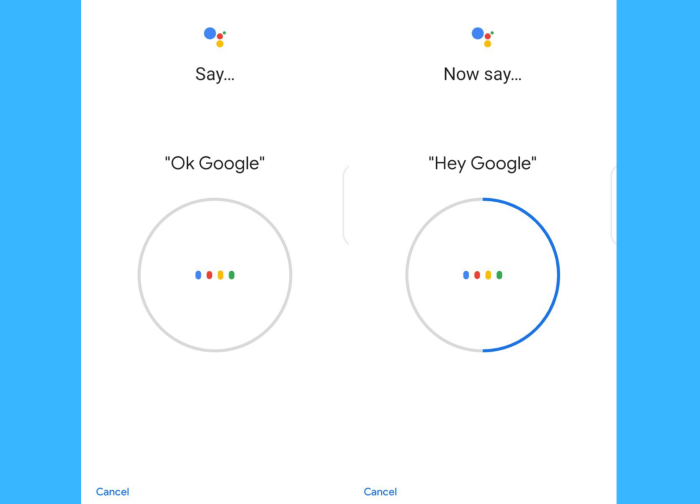

Voice Match and Personalization

Voice Match lets Google Assistant learn a model of your voice so it can recognize when you are the one speaking. This helps reduce accidental activations and lets the Assistant tailor results to you, although it is not a guarantee: a similar voice or a recording may still be accepted. To enable it, open the Google Assistant settings and locate the “Voice Match” option. Ensure it is toggled on. If the Assistant struggles to recognize you, retraining the voice model in a quiet room, speaking the way you normally do, can help it capture the nuances and variations in your speech.

Microphone Sensitivity

Adjusting sensitivity can matter for reliable activation. A setting that is too low might fail to pick up your voice, especially in noisy environments. Conversely, a setting that is too high might react to background noise, resulting in accidental activations or inaccurate transcriptions. The ideal level depends on your environment and the volume of your voice. Within the Google Assistant or device settings, look for a sensitivity option; on supported speakers and displays it is labeled “Hey Google” sensitivity, and it controls how readily the device responds to the hotword rather than the microphone’s gain. Start with the default setting and adjust incrementally until you find the best balance between responsiveness and false triggers. Test each level while speaking at your typical volume in the environments where you normally use the Assistant.
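To compare settings objectively rather than by feel, you can note what the Assistant transcribed at each level and score it against what you actually said. A common measure is word error rate (WER): the number of word substitutions, deletions, and insertions divided by the number of words spoken. The sketch below assumes you copy the transcripts by hand from the Assistant’s screen; the setting names and transcripts are hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between the two strings, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the ref words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)

spoken = "set a timer for 25 minutes"
# Hypothetical transcripts noted at three sensitivity levels.
transcripts = {
    "low": "set a time for 25",
    "default": "set a timer for 25 minutes",
    "high": "set a timer for 25 minutes to the next tuesday",
}
for setting, heard in transcripts.items():
    print(f"{setting}: {word_error_rate(spoken, heard):.2f}")
# low: 0.33
# default: 0.00
# high: 0.67
```

Repeating the same handful of phrases at each level and keeping the level with the lowest average WER gives a more reliable answer than a single attempt.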

Background Noise Reduction

Some devices and Assistant versions include features that aim to minimize the impact of background noise. These features filter out extraneous sounds so the Assistant can focus on your voice, which is particularly beneficial in environments such as busy offices or public transportation. The name of the setting varies by device and Assistant version, and some devices apply noise filtering automatically without exposing a toggle. Where an option exists, it is typically located within the settings menu under a section related to voice recognition or audio, and enabling it is recommended for users who frequently interact with the Assistant in noisy environments.

Alternative Voice Input Methods

If voice recognition remains problematic, alternative input methods provide a fallback. You can type commands instead of speaking them, using either the on-screen keyboard or a connected Bluetooth keyboard. Typing also suits users who prefer not to use their voice and situations where voice input is inconvenient or impossible. The keyboard option is typically available within the Google Assistant app’s main interface, offering convenient and immediate access.

Utilizing Feedback Mechanisms

Providing feedback on Google Assistant’s voice recognition accuracy is crucial for its ongoing improvement. Your experiences directly influence the algorithms that power this technology, shaping its ability to understand and respond to diverse accents, speech patterns, and background noises. By actively participating in the feedback process, you contribute to a more accurate and reliable virtual assistant for everyone.

Google has established several mechanisms for users to report voice recognition errors. This feedback is invaluable, as it allows Google’s engineers to identify recurring issues and prioritize improvements to the underlying algorithms. The more detailed and constructive your feedback, the more effective it will be in guiding these improvements.

Feedback Submission Process

Reporting a voice recognition error is generally straightforward. After interacting with Google Assistant and encountering an inaccuracy, look for an option within the Assistant’s interface to report the issue. This often involves selecting a button or menu item related to feedback or reporting problems. You will typically be prompted to provide details about the interaction, including the phrase spoken and how the Assistant interpreted it. You can also add context, such as the environment (noisy or quiet) and your accent. Google may also request access to a recording of the interaction, which helps them analyze the specific acoustic characteristics that led to the error. The exact process might vary slightly depending on the device and Google Assistant app version you’re using. Always refer to the in-app help resources for the most up-to-date instructions.

User Feedback’s Contribution to Performance Improvement

The collective feedback from millions of users forms a massive dataset that Google uses to train and refine its voice recognition models. This data allows engineers to identify patterns in errors, such as difficulties understanding certain words or phrases in specific accents or noisy environments. This information is then used to adjust the algorithms, improving their ability to correctly interpret a wider range of speech inputs. For example, if many users report difficulty with the Assistant understanding a particular regional dialect, Google can focus on improving its recognition capabilities for that specific dialect. This iterative process of collecting feedback, analyzing data, and refining algorithms is central to Google Assistant’s ongoing development and improvement.
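The pattern-finding step can be pictured with a small tally. This is a toy illustration using made-up reports, not a description of Google’s actual pipeline: grouping error reports by accent and environment shows where mistakes cluster.

```python
from collections import Counter

# Hypothetical error reports as (accent, environment) pairs; not real data.
reports = [
    ("Scottish", "quiet"),
    ("Scottish", "noisy"),
    ("Southern US", "noisy"),
    ("Scottish", "quiet"),
    ("Southern US", "noisy"),
    ("Scottish", "noisy"),
]

by_accent = Counter(accent for accent, _ in reports)
by_environment = Counter(env for _, env in reports)

print(by_accent.most_common(1))       # [('Scottish', 4)]
print(by_environment.most_common(1))  # [('noisy', 4)]
```

A tally like this only works if reports include the accent and environment in the first place, which is why context in your feedback matters.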

Impact of Detailed and Constructive Feedback

Detailed, constructive feedback is far more useful to Google than a general complaint. Simply stating “Google Assistant didn’t understand me” is less helpful than providing specifics like: “I said ‘set a timer for 25 minutes,’ but the Assistant understood it as ‘set a timer for 25 minutes to the next Tuesday’. I was speaking in a moderately noisy coffee shop with a slight Southern accent.” The more information you provide, including the exact phrase spoken, the context, and the specific error, the better Google can understand the nature of the problem and develop targeted solutions. Details about the environment (background noise levels, location) and your accent also help engineers tailor the system to diverse usage scenarios, which ultimately leads to a more accurate Google Assistant experience for all users.
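If you report errors often, a small template keeps your feedback consistent. The sketch below is a personal note-taking aid, not a Google API: the `RecognitionReport` class and its fields are hypothetical names, and the resulting text is meant to be pasted into whatever feedback form your device offers.

```python
from dataclasses import dataclass

@dataclass
class RecognitionReport:
    """One misrecognized request, with optional context."""
    spoken: str
    recognized: str
    environment: str = ""
    accent: str = ""

    def summary(self):
        """Build a feedback message that states the phrase, the error, and the context."""
        parts = [f'I said "{self.spoken}", but the Assistant heard "{self.recognized}".']
        if self.environment:
            parts.append(f"Environment: {self.environment}.")
        if self.accent:
            parts.append(f"Accent: {self.accent}.")
        return " ".join(parts)

report = RecognitionReport(
    spoken="set a timer for 25 minutes",
    recognized="set a timer for 25 minutes to the next Tuesday",
    environment="moderately noisy coffee shop",
    accent="slight Southern",
)
print(report.summary())
```

Keeping the exact spoken phrase and the recognized phrase as separate fields makes it harder to forget either half of the comparison.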

Improving Google Assistant’s voice recognition accuracy isn’t about a single fix, but a multifaceted approach. By understanding the technical limitations, optimizing microphone input, refining your speech patterns, and effectively utilizing Google Assistant’s settings, you can significantly enhance its performance. Remember to leverage feedback mechanisms to contribute to ongoing improvements and explore alternative input methods when needed. Through a combination of technical adjustments and user awareness, a more seamless and accurate interaction with Google Assistant is achievable.

Frequently Asked Questions

Does Google Assistant learn my voice over time?

Yes, Google Assistant’s voice recognition improves with continued use. The more you interact with it, the better it adapts to your specific voice and speech patterns.

Can I train Google Assistant to recognize my accent better?

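There is no dedicated accent training, but you can retrain Voice Match with your own voice and check that the Assistant’s language is set to the regional variety you speak. Consistent use and feedback on misinterpretations also help Google improve recognition for your accent over time.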

What if my Google Assistant still doesn’t understand me after trying these tips?

Consider contacting Google support for further assistance or checking for software updates. Hardware issues with your device’s microphone should also be investigated.